Under review: Multi-agent Deep Reinforcement Learning to Improve Dispatch System for Autonomous Trucks

Published in Journal of Intelligent Transportation Systems, 2024

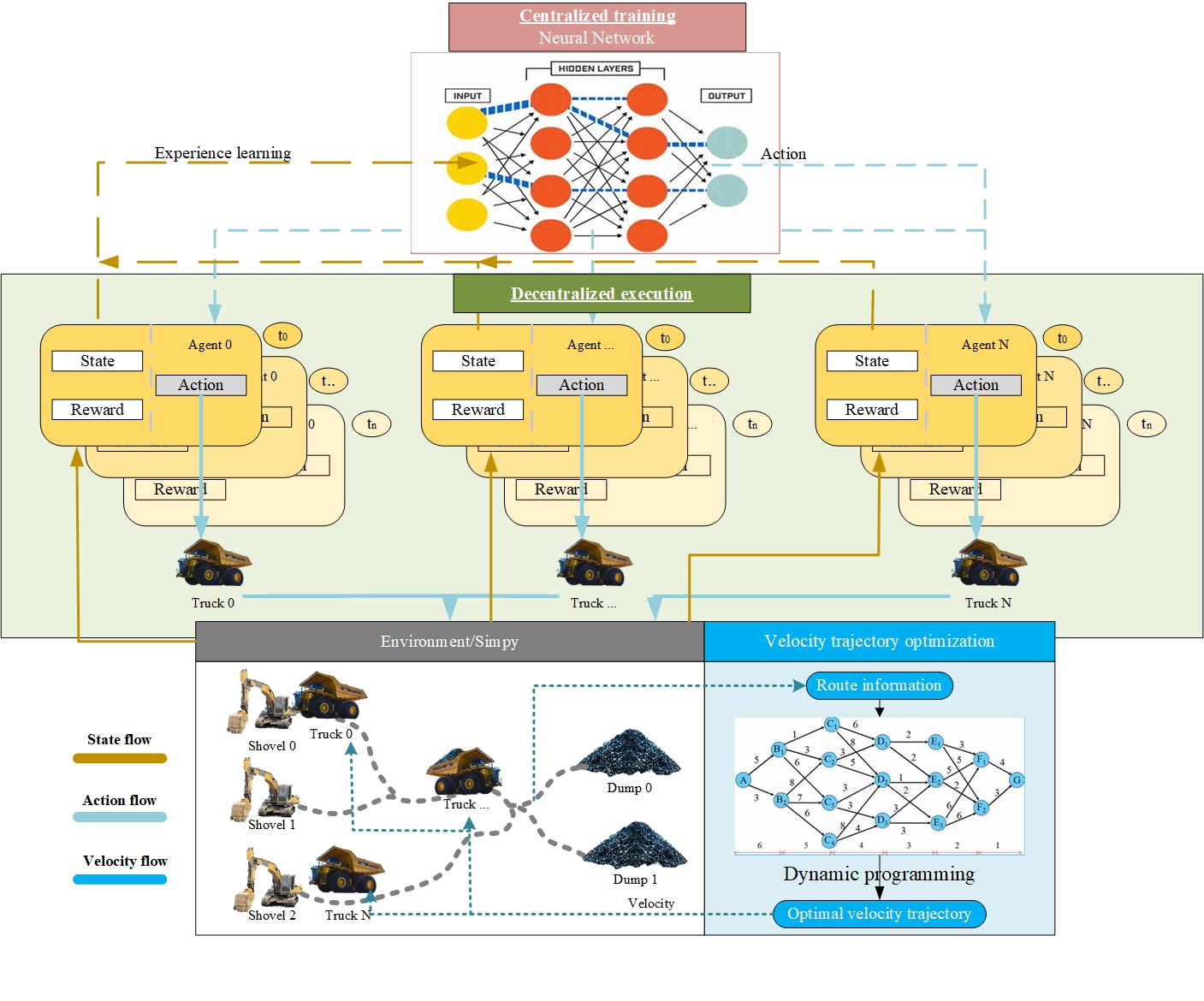

In mining transportation, conventional scheduling and human-controlled dispatching often lead to reduced efficiency and suboptimal outcomes, including wasted resources, increased energy consumption, and safety risks. By integrating Deep Q-Network (DQN), a model-free reinforcement learning (RL) method, with a dynamic programming trajectory optimization method, the efficiency of mining transportation can be improved, reducing waiting times and energy consumption. The proposed approach aims to improve the fleet’s decision-making with respect to payload management, queueing duration, and the number of trucks in the waiting queue. The approach is validated repeatedly in the simulator, where it outperforms the conventional fixed schedule (FS) and shortest queuing (SQ) strategies. The dispatching policy generated by the DQN algorithm distributes tasks more evenly between dump sites and shovel sites and is robust to unplanned truck failures.
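To make the two baselines concrete, here is a minimal sketch of how a fixed schedule (FS) and a shortest queuing (SQ) dispatcher might choose a destination. The site names, the `assignment` and `queue_lengths` mappings, and both function names are illustrative assumptions; they are not taken from the paper's simulator.

```python
from typing import Dict


def fixed_schedule(truck_id: int, assignment: Dict[int, str]) -> str:
    """Fixed schedule (FS): each truck always serves its pre-assigned site."""
    return assignment[truck_id]


def shortest_queue(queue_lengths: Dict[str, int]) -> str:
    """Shortest queuing (SQ): pick the site with the fewest waiting trucks.

    Ties are broken by site name so the choice is deterministic.
    """
    return min(sorted(queue_lengths), key=lambda site: queue_lengths[site])


# Hypothetical snapshot of the fleet: trucks waiting at each shovel site.
queue_lengths = {"shovel_A": 3, "shovel_B": 1, "shovel_C": 2}
assignment = {0: "shovel_A", 1: "shovel_B"}

fs_choice = fixed_schedule(0, assignment)   # ignores the current queues
sq_choice = shortest_queue(queue_lengths)   # reacts to queue length only
```

In this sketch neither baseline looks at payload or expected queueing duration, which are the quantities the abstract says the learned policy takes into account.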

Main Contribution

-1. Simulation Platform Development: Mining haulage fuel consumption accounts for over half of the greenhouse gas emissions, highlighting the need for optimized solutions. Developed a fleet dispatch simulation platform using PyTorch and SimPy, modeling mountainous scenarios, including assembly, real-time dynamic scheduling, and resupply.

-2. Multi-Agent Reinforcement Learning System: Each truck is treated as an independent agent with a standardized set of state variables. Integrated Deep Q-Network (DQN), a model-free reinforcement learning method, with dynamic programming optimization methods to enhance transportation efficiency, reducing waiting time and energy consumption. The DQN algorithm enables the agents to learn and refine dispatching policies by maximizing rewards.

-3. Results and Advantages: The system demonstrated superior adaptability and efficiency compared to traditional methods. Achieved a 10% reduction in energy consumption per kilogram transported and a 3,800-ton increase in production. It quickly adjusts fleet deployment in response to unexpected situations and complex transport environments. The system showed improved task balancing and robustness against truck failures.
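The per-truck agent idea in the contributions above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the `TruckState` fields are guessed from the quantities named in the abstract, the Q-values are hard-coded where a real DQN would compute them with a neural network, and the reward, discount factor, and exploration rate are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class TruckState:
    """Hypothetical per-truck observation shared by all agents."""
    payload_t: float        # current payload in tonnes
    queue_time_min: float   # expected queueing duration in minutes
    trucks_waiting: int     # trucks already in the waiting queue

    def as_vector(self) -> List[float]:
        """Flatten the state into the input a Q-network would receive."""
        return [self.payload_t, self.queue_time_min, float(self.trucks_waiting)]


def epsilon_greedy(q_values: Sequence[float], epsilon: float,
                   rng: random.Random) -> int:
    """Explore with probability epsilon, else take the highest-valued action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_target(reward: float, next_q_values: Sequence[float],
              gamma: float, done: bool) -> float:
    """Standard DQN target: r + gamma * max_a' Q(s', a'); no bootstrap if done."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


rng = random.Random(0)
state = TruckState(payload_t=40.0, queue_time_min=6.5, trucks_waiting=2)

# Placeholder Q-values, one per candidate destination (assumed, not learned).
q_values = [0.2, 1.5, -0.3]
greedy_action = epsilon_greedy(q_values, epsilon=0.0, rng=rng)
target = td_target(reward=1.0, next_q_values=[0.5, 2.0], gamma=0.9, done=False)
```

Because every truck uses the same state layout, one network can in principle be shared across agents, although whether the paper shares parameters is not stated here.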